Unlocking Accurate AI Answers: The Power of RAG for Business

AI isn’t just about answering questions anymore; it’s about answering them accurately, with your company’s real-world data, and doing so in a way that’s secure, scalable, and reliable. Whether you’re building a legal research assistant, a customer support chatbot, or an internal tool that can summarize hundreds of documents in seconds, there’s one approach that’s powering the next wave of business-ready AI: Retrieval-Augmented Generation (RAG).

RAG bridges the gap between powerful AI models like GPT or Claude and the proprietary information your business runs on. Instead of relying only on what the AI already “knows” (which is limited to the data it was trained on, often months or even years ago), RAG lets you inject current, relevant, context-rich information directly into the prompt the model reasons over.

In other words, it’s how you turn a clever AI into a trustworthy business partner.

Why RAG Matters

A common misconception in AI adoption is that to make a model “know your business,” you need to fine-tune it on all your internal documents. In reality, fine-tuning is about giving an AI new skills or writing styles — not keeping it updated with your constantly changing data. Even if you fine-tuned a model on your company’s materials, that knowledge would be frozen at the moment of training.

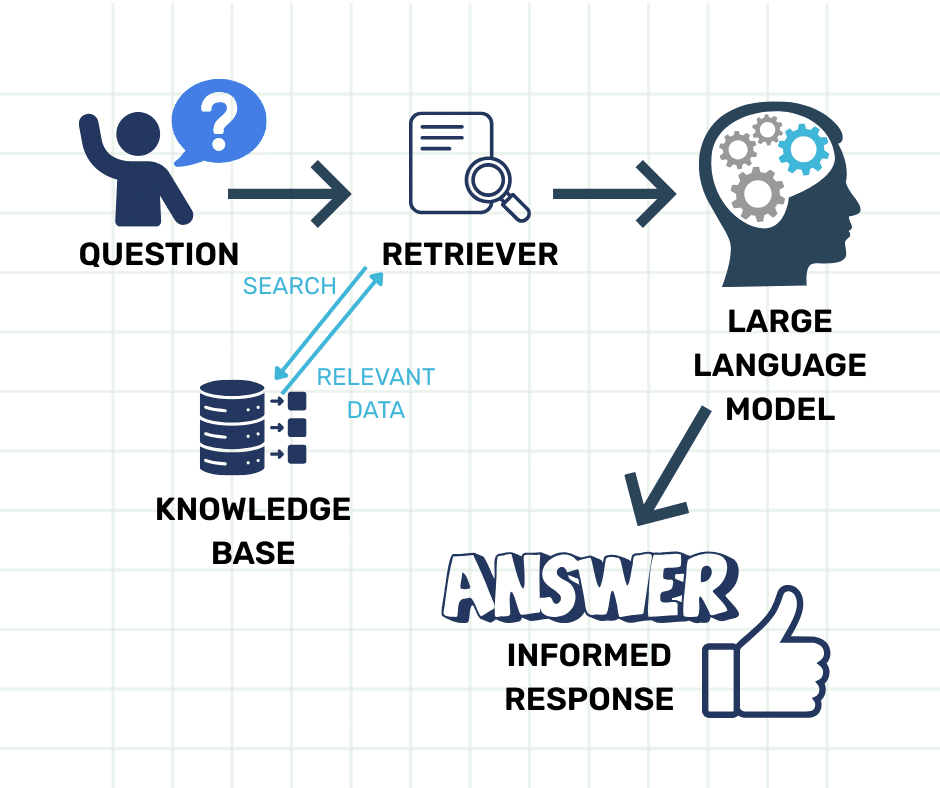

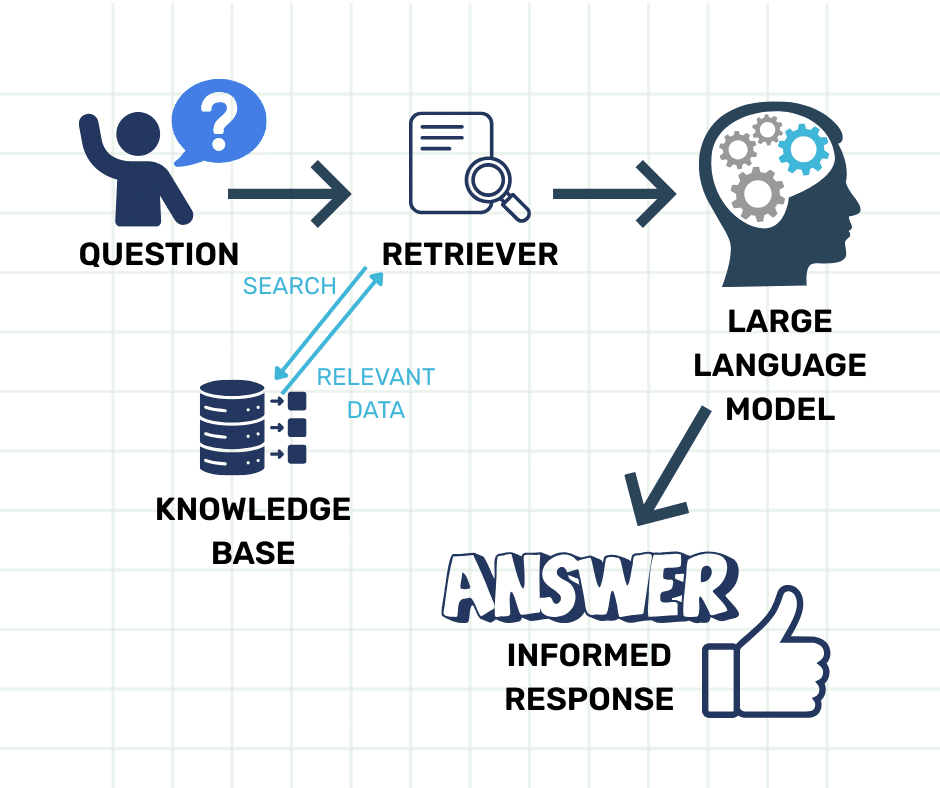

Retrieval-Augmented Generation (RAG) solves this problem by bringing fresh, relevant data into the AI’s reasoning process at the moment you need it:

Retrieve: When the AI receives a query, a semantic search system matches it against your private databases, document collections, or APIs, returning the most relevant text “chunks” or records.

Augment: These results are injected into the AI’s context window alongside your original question, so the model can reason with both its general knowledge and your specific, current data.

Generate: The AI produces an answer that blends its built-in reasoning with the retrieved information.

Because the data is pulled in at query time, answers reflect whatever is currently in your connected sources, without retraining and without exposing your entire dataset to the model all at once.
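To make the three steps concrete, here is a minimal Python sketch of the retrieve, augment, generate loop. It rests on several simplifying assumptions. The in-memory `CHUNKS` list stands in for your real data sources. A bag-of-words cosine similarity stands in for true semantic (embedding-based) search. The "model" is any function that takes a prompt string and returns text. All names are illustrative and do not belong to any particular library.

```python
import math
import re
from collections import Counter
from typing import Callable

# Hypothetical toy document store; a real pipeline would pull these chunks
# from your databases, document collections, or APIs.
CHUNKS = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our support desk is open Monday to Friday, 9am to 5pm Eastern.",
    "Enterprise plans include single sign-on and a dedicated account manager.",
]


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts: a lexical stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Retrieve: rank chunks by similarity to the query and keep the top k."""
    q = _vectorize(query)
    ranked = sorted(chunks, key=lambda c: _cosine(q, _vectorize(c)), reverse=True)
    return ranked[:k]


def augment(query: str, context: list[str]) -> str:
    """Augment: place the retrieved chunks in the prompt next to the question."""
    sources = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the sources below. If they do not contain the answer, say so.\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )


def generate(query: str, chunks: list[str], llm: Callable[[str], str], k: int = 1) -> str:
    """Generate: send the augmented prompt to whatever model `llm` wraps."""
    return llm(augment(query, retrieve(query, chunks, k)))


if __name__ == "__main__":
    # Placeholder "model" that echoes its prompt; swap in a real LLM client.
    echo_llm = lambda prompt: prompt
    print(generate("How long do refunds take?", CHUNKS, echo_llm))
```

In production you would replace `_vectorize` with an embedding model and `CHUNKS` with a vector database. The shape of the pipeline stays the same.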

How RAG and MCP Work Together

While RAG handles the search and context-injection part of the equation, it still needs a way to connect to your data sources and business tools. That’s where the Model Context Protocol (MCP), which we covered in a recent blog post, comes in. MCP standardizes how AI applications connect to external data sources and tools in real time.

Think of it like this:

- RAG is your AI’s librarian, fetching the right books for each question.

- MCP is the library’s intercom system and tool bench, telling the librarian which resources exist and letting them take action after answering.

Together, they make AI not just informed, but operational — capable of retrieving accurate data and then acting on it, all within your company’s rules and workflows.

For more on MCP functionality and utilization opportunities, see our blog, MCP: The AI Workflow Tool Your Business Needs.
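Under the hood, MCP messages are JSON-RPC 2.0 requests. The sketch below only builds the request objects a client would send. It shows the split between discovery (“which resources and tools exist?”), retrieval, and action. It assumes the connection and the protocol’s `initialize` handshake are handled elsewhere. The resource URI and the `create_support_ticket` tool are hypothetical examples. Check the MCP specification for the full message schemas.

```python
import itertools
import json
from typing import Optional

# Monotonically increasing request ids, as JSON-RPC expects for matching replies.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the envelope MCP messages use."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Discovery: ask the server which tools and resources it exposes (the "intercom").
list_tools = jsonrpc_request("tools/list")
list_resources = jsonrpc_request("resources/list")

# Retrieval: read one resource to use as context (hypothetical URI).
read_policy = jsonrpc_request("resources/read", {"uri": "file:///policies/refunds.md"})

# Action: invoke a tool after answering (hypothetical tool name and arguments).
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "create_support_ticket", "arguments": {"summary": "Refund request", "priority": "normal"}},
)

if __name__ == "__main__":
    for msg in (list_tools, list_resources, read_policy, call_tool):
        print(json.dumps(msg))
```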

RAG in Action

Let’s look at how RAG works in practice:

Healthcare Provider: A patient portal chatbot needs to answer questions about upcoming appointments and recent lab results. With RAG, the AI retrieves the latest records from multiple secure systems, augments its prompt with that information, and generates a response through a pipeline designed to meet HIPAA requirements, all without a human ever having to dig through multiple databases.

Financial Services: An advisor-facing tool retrieves the latest market trends, compliance regulations, and a client’s personal investment portfolio. The AI then uses this augmented context to draft personalized recommendations that reflect current compliance rules, ready for the advisor to review and share with the client.

In both cases, RAG ensures that the answers aren’t just plausible; they’re grounded in real data from your systems.

Future-Proofing Your AI Stack

The real beauty of RAG is that it’s model agnostic. You can swap LLM providers as new ones emerge, without redoing your entire system. By pairing it with MCP, you get modular, secure access to both your data and your operational tools.
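One way to keep the stack model-agnostic is to have the pipeline depend on a small interface rather than on a vendor SDK. This is a minimal sketch under that assumption. `LLMProvider`, `EchoProvider`, `UpperProvider`, and `answer` are hypothetical names, and the two providers are stand-ins for real vendor clients.

```python
from typing import Protocol


class LLMProvider(Protocol):
    """Hypothetical interface: anything with complete(prompt) -> str can power the pipeline."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in provider for testing; a real one would call a vendor SDK here."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UpperProvider:
    """A second stand-in, showing that swapping providers needs no other changes."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


def answer(question: str, context: list[str], provider: LLMProvider) -> str:
    """Build the augmented prompt once; only the provider varies."""
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
    return provider.complete(prompt)


if __name__ == "__main__":
    ctx = ["Refunds are issued within 14 days."]
    for provider in (EchoProvider(), UpperProvider()):
        print(answer("How long do refunds take?", ctx, provider))
```

With this design, adding a new vendor means writing one more class with a `complete` method. The retrieval and prompt-building code does not change.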

In 2025 and beyond, companies that combine RAG for accurate context with MCP for standardized tool access will have a serious competitive edge. You’ll be building AI that doesn’t just “sound smart” but is grounded in facts, aligned with your business logic, and capable of taking action.

Partner with Experts: How Rubico Can Help

At Rubico, we help organizations design and implement enterprise-grade RAG pipelines that integrate seamlessly with MCP and your existing systems, including:

- Secure, compliant retrieval of sensitive data

- Chatbots that provide up-to-date, reliable answers

- Workflow automation that’s grounded in real business context

- Cross-platform AI integration with your tech stack

We guide you from the first prototype to production-level deployment, making sure your AI is not just impressive, but indispensable.

If you’re exploring how AI can meaningfully support your team, let’s talk. RAG is the future of enterprise-grade AI, and Rubico makes it real. Reach out to our team.